Software

Printerface

The first thing I worked on was getting the printer to work. The entire project hinged on being able to print images on a printer designed for text and simple logos, so I wanted to evaluate that aspect of the printer before getting too involved.

I picked up the printer from Adafruit, and tried out their Arduino library. The library includes code to print text in different fonts, barcodes, QR codes, and bitmap images.

Their example printed a small Adafruit logo, so I hijacked that portion of the code and inserted a “grayscale” image.

This printer is not capable of printing anything but black or white pixels. The best way to give the illusion of grayscale is to “dither”. In image processing, dithering gives an image the appearance of grayscale by alternating black and white pixels in varying proportions. I’ll go into details later about how this is done, but for my early demo, I used the application HyperDither for OS X.

It took this:

And produced this:

(Note that when resized, dithered images can have super strange artifacts. If the image above looks weird, make sure you’re viewing it at native resolution on your device.)

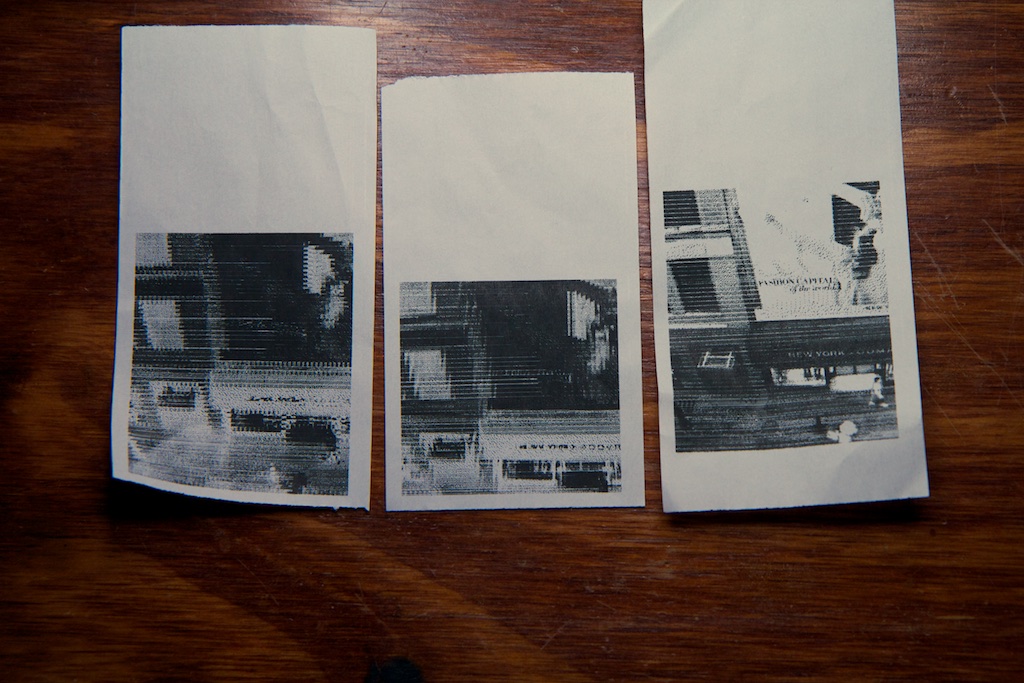

When I inserted this into the Arduino code, the printer made this:

Though it wasn’t great, it was definitely passable. The images all end up with horizontal bars across them, but the printer is actually pretty darn precise.

By the way, one of the best parts about working on a paper printer is that you get an automatic catalog of every step of the process. I went through about 4 rolls of “film” getting everything to work:

JPEG

The next big challenge (or maybe the only big challenge) was getting my firmware to decode JPEG.

The camera module from Adafruit is a little weird. I get the feeling that it’s not really meant for still images. For starters, it has a coaxial video output that allows you to get a live video feed. I’ve never hooked this up to anything, but I think still images work by first freezing the video (buffering the current frame) and then compressing that frame and transmitting it over a UART.

While JPEG compression is a bit of a pain to work with when compared to straight bitmap, it can substantially reduce transmission times. The camera’s 640×480 images usually come out to around 40-50kB. An uncompressed version with 8 bits of color per channel would be close to a megabyte and take 20 times longer to transmit.

The JPEG algorithm is based on both mathematical and physiological shortcuts. It is not a lossless format, so the image that comes out is not identical to the image that went in. The hope is that the changes are subtle enough that a human viewer won’t notice the difference. For example, humans are more sensitive to small changes in brightness than to small changes in color, so color information is compressed more heavily.

Because I only needed brightness information (grayscale), I was hoping to find a way to optimize my decompression to ignore color info and possibly decode faster. Digging into the JPEG decompression algorithm, I pretty quickly realized that I was not going to be writing my own decoder (similar to how I felt with the FATFS for the Gutenberg clock).

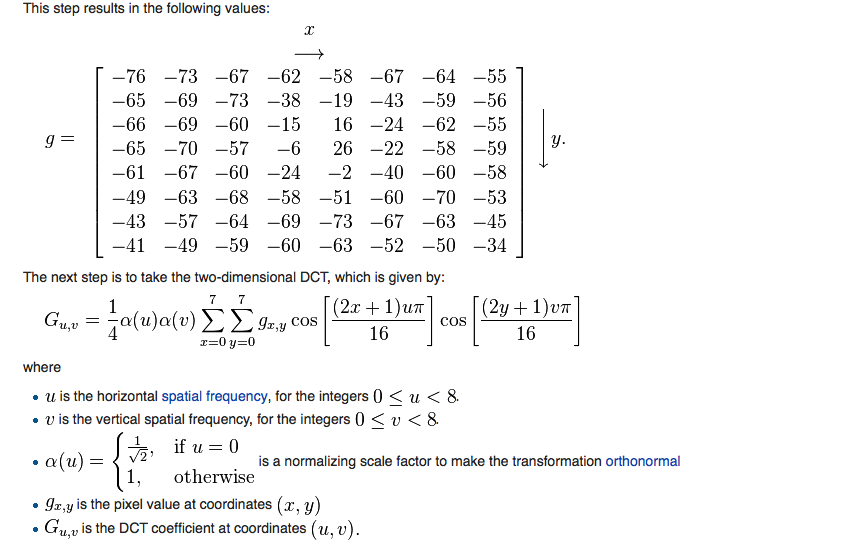

This crap is complicated.

There are a ton of JPEG decoders available, including one from the Independent JPEG Group, who released their first version in October of 1991, but few of them are designed for small embedded devices. With just 64k of memory, many of them won’t work on my processor, and some even include calls to the operating system while my camera has no OS.

I found PicoJPEG, which is a barebones JPEG decompression tool that can run on limited hardware and doesn’t require any hooks into an OS.

To begin testing this code, I needed an image to work with. I grabbed one of the test images on Adafruit’s site and wrote a simple Python script that just takes in the JPEG file and spits out its raw contents in an array that can be copied to a C file.

The STM32F407 on the Discovery board has 1MB of flash and 192KB of memory, so a 47,040 byte array was no trouble.

Getting this code to work was surprisingly easy. The software is written to run with a filesystem, so there is a function called “jpeg_need_bytes_callback()” which is supposed to grab the next chunk of compressed data from the filesystem to continue processing. This way, there’s never a need to keep the entire image in memory.

All I had to do was modify this function to pull bytes from my array instead of the filesystem, and it happily ran to completion with no errors. Of course, I had no way to actually view the image yet which was my next task.
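
A minimal sketch of that swap, not the actual firmware: the function below mirrors the shape of PicoJPEG's "need bytes" callback as I understand it from its header (copy up to `buf_size` bytes, report how many were copied, return 0 on success), so check it against your copy of the library. The names `jpeg_source_t` and `array_need_bytes` are made up for illustration.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical cursor over a JPEG that lives in an array (flash or RAM). */
typedef struct {
    const uint8_t *data;  /* start of the compressed image */
    size_t size;          /* total length in bytes */
    size_t pos;           /* next byte to hand to the decoder */
} jpeg_source_t;

/* Copy up to buf_size bytes into pBuf, report how many were copied,
 * and return 0 for success. Reporting 0 bytes signals end of data. */
unsigned char array_need_bytes(unsigned char *pBuf, unsigned char buf_size,
                               unsigned char *pBytes_actually_read,
                               void *pCallback_data)
{
    jpeg_source_t *src = (jpeg_source_t *)pCallback_data;
    size_t remaining = src->size - src->pos;
    size_t n = (remaining < buf_size) ? remaining : buf_size;

    memcpy(pBuf, src->data + src->pos, n);
    src->pos += n;
    *pBytes_actually_read = (unsigned char)n;
    return 0;
}
```

The decoder never sees where the bytes come from, which is why the same hook works for a filesystem, an array, or a serial link.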

Image Processing

Grayscale

The first step to getting a full color image through a black and white printer is to convert it to grayscale.

Image data is decoded in 8×8 pixel blocks from left to right, top to bottom. When one of these blocks comes in, PicoJPEG produces three arrays that contain the red, green, and blue values of all of the pixels in that block.

    const uint8_t *pSrcR = image_info.m_pMCUBufR + src_ofs;
    const uint8_t *pSrcG = image_info.m_pMCUBufG + src_ofs;
    const uint8_t *pSrcB = image_info.m_pMCUBufB + src_ofs;

The task is then to pare these down into a single array that contains just grayscale information. But which of the three do you use? The green portion of an image will look very different from the red or blue portion. My first guess was to just average the three values, but I learned that this isn’t right either.

Properly converting RGB to grayscale involves weighting each color channel according to how sensitive your eye is to that color. Human eyes are very insensitive to blue, so you want to scale down blue’s contribution to the grayscale image.

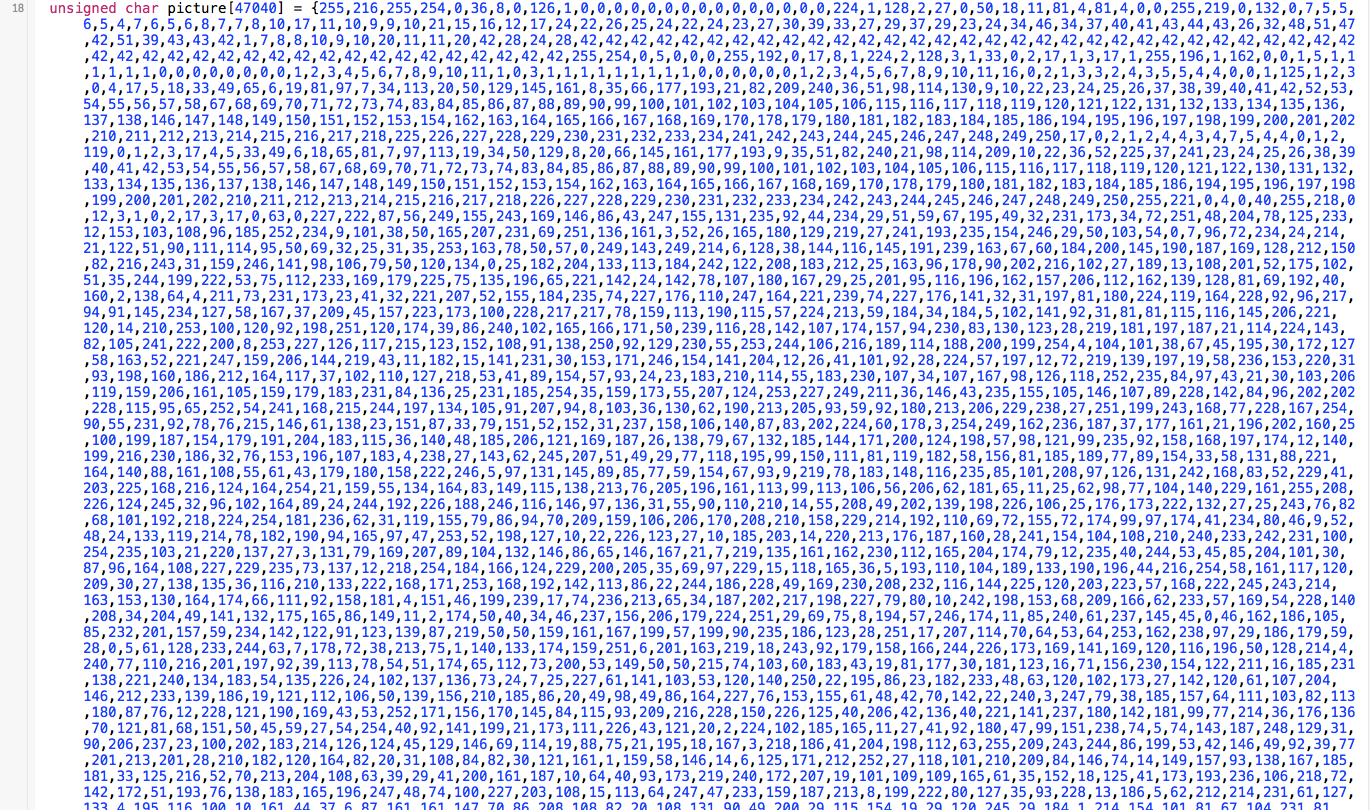

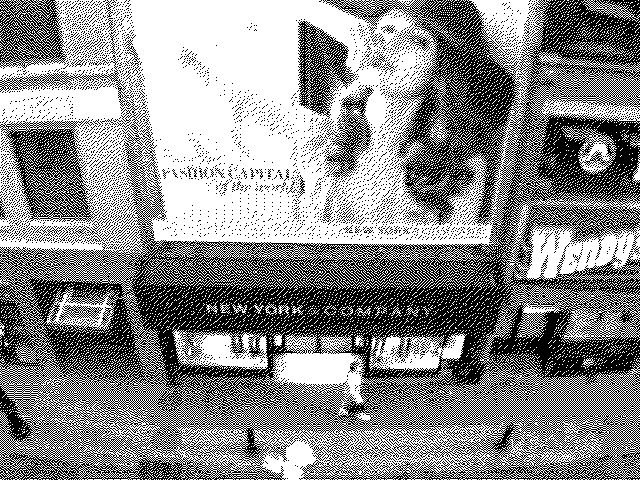

Here’s a version of the test image using two different algorithms.

Original

Even Red Green Blue contribution:

Scaled Red Green Blue contribution:

As you can see, the first grayscale image looks weird. For example, the lettering in “Wendy’s” doesn’t stand out as much as it should.

Looking around online, I found the numbers 72% green, 21% red and 7% blue, so I wrote a loop that stepped through each pixel of the 8×8 block and performed this grayscale conversion:

ditheredImage[i][j] = *(pSrcR++)*210 + *(pSrcG++)*720 + *(pSrcB++)*70;

You’ll notice that I used “210” where you might expect “0.21”. The STM32F105 does not have a floating-point unit (FPU), which means that it is super slow when performing math on non-integer numbers. Rather than using floating point, I opted for fixed point by multiplying every weight by 1000 and pretending the decimal point was three places over.
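
Here is a small self-contained sketch of that fixed-point trick, using the weights from the text. The helper names `gray_scaled` and `gray_8bit` are hypothetical, and the rounding step is my addition for illustration rather than something the firmware is known to do.

```c
#include <stdint.h>

/* Weights from the text (21% red, 72% green, 7% blue), scaled by 1000. */
#define W_RED   210u
#define W_GREEN 720u
#define W_BLUE   70u

/* Luminance scaled by 1000 (0 .. 255000), integer math only. */
uint32_t gray_scaled(uint8_t r, uint8_t g, uint8_t b)
{
    return r * W_RED + g * W_GREEN + b * W_BLUE;
}

/* The same value brought back to 0 .. 255, rounded to nearest. */
uint8_t gray_8bit(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint8_t)((gray_scaled(r, g, b) + 500u) / 1000u);
}
```

Because the three weights add up to exactly 1000, pure white still maps to 255 and nothing overflows a 32-bit integer.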

As a side note, the STM32F407 does have an FPU, but I got a hard fault every time I tried to enable it. I ended up leaving it disabled using “-mfloat-abi=soft” in the Makefile, but it’s something I want to look into in the future.

Dither

The next step is dithering.

The word “dither” comes from the Middle English word “didderen”, which means “to tremble”. Its use in computing dates back to WWII, when it was found that the mechanical computers used to aid bomber pilots performed better in the air than on the ground. The explanation for this phenomenon was that the vibration of the airplane prevented the gears inside the computer from getting stuck in an intermediate location. Small perturbations unseated the gears and caused them to shuffle into the correct position.

You might have experienced something like this when measuring out flour. By tapping the side of the measuring cup, you’re introducing noise and forcing the flour to settle into a discrete position.

In the context of image processing, dithering is doing the equivalent by nudging a gray pixel and forcing it to be either white or black. To improve the image quality, the error (how far it was from white or black) is combined with the surrounding pixels nudging them closer to black or white.

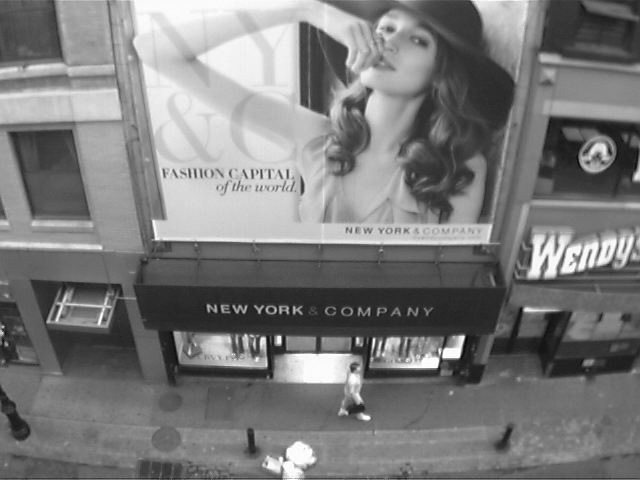

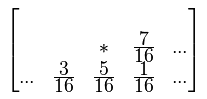

One of the simplest and most commonly used algorithms for this “nudge and pass” method is Floyd-Steinberg dithering. Wikipedia explains it concisely with this image:

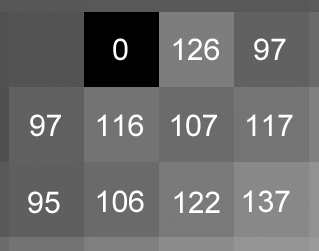

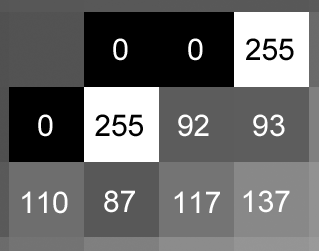

The pixel being nudged is in the asterisk space, and the error is shared with the surrounding pixels according to those proportions. Here’s a quick example on a portion of the test image. The pixel values can be 0 (black) through 255 (white):

Starting near the top left, 86 is closer to 0 than 255, so it is dropped to zero, and the value 86 is multiplied by the fractions in the table above and added to the neighboring pixels:

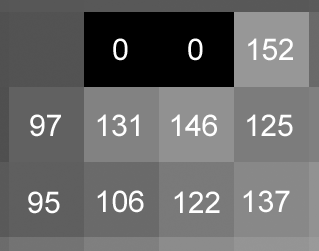

This continues moving to the right:

Since 152 is closer to 255 than 0, it is pushed up to 255, and the remainder (103) is multiplied by the fractions and subtracted from the neighboring pixels:

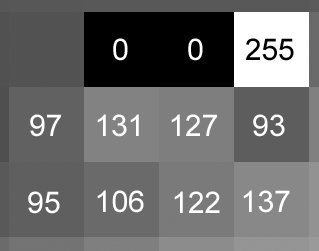

This continues until it reaches the right side of the image where the remainders are ignored and then begins again in the next row:

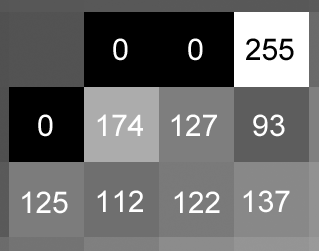

And so on:

You can see how whenever a pixel gets blacked or whitened out, the surrounding pixels get slightly brighter or darker to compensate. In this way, gray is approximated with alternating black and white.
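
The walkthrough above can be sketched in a few lines of C. This is a plain whole-image version for illustration, not the block-based code used in the camera; it stores intermediate values back into the 8-bit buffer and clamps them, which is a simplification. The function name `floyd_steinberg` is my own.

```c
#include <stdint.h>

static uint8_t clamp_u8(int v)
{
    return (uint8_t)(v < 0 ? 0 : (v > 255 ? 255 : v));
}

/* In-place Floyd-Steinberg dither of a w x h grayscale image stored
 * row by row. On return every pixel is 0 (black) or 255 (white). */
void floyd_steinberg(uint8_t *img, int w, int h)
{
    for (int y = 0; y < h; y++) {
        for (int x = 0; x < w; x++) {
            int i = y * w + x;
            int old = img[i];
            int out = (old < 128) ? 0 : 255;
            int err = old - out;   /* positive when pushed to black */
            img[i] = (uint8_t)out;

            /* Share the error: 7/16 right, 3/16 below-left,
             * 5/16 below, 1/16 below-right. Edges drop their share. */
            if (x + 1 < w)
                img[i + 1] = clamp_u8(img[i + 1] + err * 7 / 16);
            if (y + 1 < h) {
                if (x > 0)
                    img[i + w - 1] = clamp_u8(img[i + w - 1] + err * 3 / 16);
                img[i + w] = clamp_u8(img[i + w] + err * 5 / 16);
                if (x + 1 < w)
                    img[i + w + 1] = clamp_u8(img[i + w + 1] + err * 1 / 16);
            }
        }
    }
}
```

Running it on the two values from the example, 86 followed by 152, turns the first pixel black and pushes the second one up to white.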

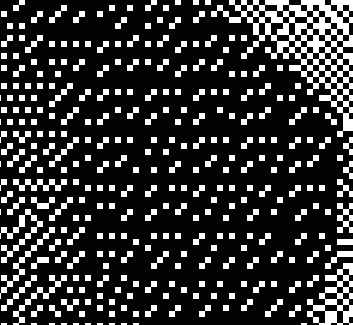

While it’s easy to store a 640×480 pixel black and white image, storing a grayscale version takes up a substantial amount of space. To keep my memory usage down, I processed the image as it came in. My first attempt at this was processing each 8×8 block independently from the others:

This produced visible artifacts at the boundaries of each 8×8 block inside of which there were often repeated patterns.

This is a result of two adjacent blocks being very similar and coming up with similar solutions for the same shade of gray.

To mitigate this problem, I decided to keep track of the eight error values running off the right side of every block and add them to the next block in the row. This produced this image:

While this improved the image, there was still some weirdness in the horizontal direction:

Finally, I added in vertical spill over as well. This was a bit of a pain because the row is 640 pixels long, so it was a lot to keep track of. The results were great though:

I did all of this iterating in Python which made it easy to see my outputs immediately. Once I was happy with the result, I just converted it to C and dropped it into my code.
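
The carry scheme described above (eight error values running off the right side of each block, plus a 640-entry row of error spilling downward) might look roughly like the sketch below. It is an approximation written for this explanation, not the firmware itself: the `dither_carry_t` layout and both function names are hypothetical, and error that would flow down-left into an already finished block is simply dropped.

```c
#include <stdint.h>
#include <string.h>

#define IMG_W 640   /* image width in pixels, from the text */
#define BLK   8     /* decoder block size */

/* Hypothetical carry state shared by all blocks of one image. */
typedef struct {
    int16_t right[BLK];        /* error entering the next block's left column */
    int16_t down_cur[IMG_W];   /* error entering this block row from above */
    int16_t down_next[IMG_W];  /* error collected for the block row below */
} dither_carry_t;

/* Call once after finishing each row of blocks. */
void carry_next_block_row(dither_carry_t *c)
{
    memcpy(c->down_cur, c->down_next, sizeof c->down_cur);
    memset(c->down_next, 0, sizeof c->down_next);
    memset(c->right, 0, sizeof c->right);
}

/* Dither one 8x8 block in place (pixels become 0 or 255).
 * x0 is the block's left edge in image coordinates. */
void dither_block(uint8_t blk[BLK][BLK], int x0, dither_carry_t *c)
{
    /* Working copy with a one-pixel margin on the left, right and
     * bottom so spilled error has somewhere to land. */
    int work[BLK + 1][BLK + 2] = {{0}};

    for (int r = 0; r < BLK; r++)
        for (int col = 0; col < BLK; col++)
            work[r][col + 1] = blk[r][col];
    for (int col = 0; col < BLK; col++)
        work[0][col + 1] += c->down_cur[x0 + col];
    for (int r = 0; r < BLK; r++)
        work[r][1] += c->right[r];

    for (int r = 0; r < BLK; r++) {
        for (int col = 1; col <= BLK; col++) {
            int old = work[r][col];
            int out = (old < 128) ? 0 : 255;
            int err = old - out;
            blk[r][col - 1] = (uint8_t)out;
            work[r][col + 1]     += err * 7 / 16;
            work[r + 1][col - 1] += err * 3 / 16;
            work[r + 1][col]     += err * 5 / 16;
            work[r + 1][col + 1] += err * 1 / 16;
        }
    }

    /* Right margin feeds the next block in this row. */
    for (int r = 0; r < BLK; r++)
        c->right[r] = (int16_t)work[r][BLK + 1];

    /* Bottom margin feeds the block row below. */
    for (int col = 0; col < BLK + 2; col++) {
        int x = x0 + col - 1;
        if (x >= 0 && x < IMG_W)
            c->down_next[x] += (int16_t)work[BLK][col];
    }
}
```

The cost of the vertical carry is two 640-entry rows of 16-bit values, about 2.5 kB, which is far less than holding a full grayscale frame.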

After a few missteps with color polarity and block orientation, I could print the image!

Rotating

While it was pretty awesome to see the right picture come out of the printer, I immediately noticed a problem. The image is 640 pixels wide, while the printer only has 384 horizontal pixels to work with. This means that I was cropping 40% of the image.

The obvious solution was to rotate the image 90 degrees. This isn’t super hard to do, but it did make for some pretty cool looking pictures while I was figuring out the block orientation:

In the final version, it decompresses and dithers the entire image and then crops off the top and bottom 10% to make it fit.
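
To make the geometry concrete, here is a sketch of the coordinate mapping, assuming a 90 degree clockwise rotation (the text does not say which way the image was turned) and a symmetric crop. The 480-pixel side of the image has to fit across the 384-dot print head, so 48 rows (10%) come off each end. `map_to_paper` and `print_pos_t` are illustrative names.

```c
#include <stdbool.h>

#define SRC_W   640
#define SRC_H   480
#define PRINT_W 384
#define CROP    ((SRC_H - PRINT_W) / 2)   /* 48 rows, 10% of 480 */

typedef struct {
    int col;   /* dot position across the print head, 0 .. PRINT_W-1 */
    int line;  /* printed line along the paper roll, 0 .. SRC_W-1 */
} print_pos_t;

/* Map a source pixel (x, y) to its place on paper after a 90 degree
 * clockwise rotation. Returns false for pixels in the cropped bands
 * at the top and bottom of the source image. */
bool map_to_paper(int x, int y, print_pos_t *out)
{
    if (y < CROP || y >= SRC_H - CROP)
        return false;
    out->col  = (SRC_H - CROP - 1) - y;
    out->line = x;
    return true;
}
```

With this mapping each column of the source image becomes one printed line, so the full 640-pixel width runs along the length of the paper.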

Memory problems

What you see above was all worked out on the STM32F4 Discovery board. One detail that I absolutely needed to know when picking out a processor was how big the images were. Since I was copying the entire image into RAM before decompressing it, I needed to make sure that I had enough memory to hold the entire JPEG as well as run the main program. JPEG compression rate depends heavily on image content. Images with finer details tend to not compress as well as those that have less texture.

Throughout testing, my images rarely got above 47 or 48k. With this information, I settled on the STM32F105 which has 64k of memory. It felt kind of scary getting that close to 100% full until I realized that the 16k remainder was larger than I’ve had to work with on any of my AVR projects. Even the QR clock only had 1k of memory.

This seemed to work pretty well, but working late at night, I noticed an intermittent issue where my firmware would hard fault and crash. I started blaming the debugger again because sometimes it would succeed if I wasn’t debugging, but there wasn’t much of a pattern to its success rate.

Just to verify, I grabbed my logic analyzer and started tracing the UART data coming from the camera. What I found was pretty devastating.

While most images were 47-48k, every once in a while, I’d get one over 64k. In a dark environment, the camera’s built-in gain controller boosts image brightness in an attempt to resolve more detail with little light. Boosting gain also boosts noise, so the resulting images were very noisy. Noise consists of a bunch of very fine random variations to pixel values, and it doesn’t compress well.

So here I was, having just assembled a custom PCB and figuring out that it wasn’t going to work. To make matters worse, the STM32F105 doesn’t have any other pin-compatible variants with more memory.

Fortunately, I discovered that the camera module stores the currently buffered image indefinitely, and you can request whatever portion of the data you want. The solution was to grab small portions of the image (4096 bytes at a time) and decode on the fly. The plus side was that PicoJPEG is already written to request data from disk, so I just had to swap out that code to request data from the outside device.

The camera module isn’t without its faults however. As a completely undocumented feature, if you ask for a section of the image starting with a byte address that is not divisible by 8, it will deliver bytes starting from the nearest address that is.
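
One way to live with that quirk is to only ever ask for aligned addresses and throw away the extra leading bytes. The sketch below shows the bookkeeping; `plan_request` and `cam_request_t` are hypothetical names, and whether the request length also needs to be a multiple of 8 is something I have not verified.

```c
#include <stdint.h>

#define CAM_ALIGN 8u

typedef struct {
    uint32_t start;   /* address to actually request (multiple of 8) */
    uint32_t length;  /* number of bytes to request */
    uint32_t skip;    /* leading bytes of the reply to discard */
} cam_request_t;

/* Plan a read of len bytes at addr so that the camera is only ever
 * asked for an 8-byte-aligned start address. */
cam_request_t plan_request(uint32_t addr, uint32_t len)
{
    cam_request_t r;
    r.skip = addr % CAM_ALIGN;
    r.start = addr - r.skip;
    r.length = len + r.skip;
    return r;
}
```

Since 4096 is itself a multiple of 8, fetching whole 4096-byte chunks back to back keeps every request aligned and the skip count at zero.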

The upside is that this fix partially resolved another bug with the camera module. The camera supports a number of different baud rates up to 115200, but I found that when transmitting at those speeds, images would sometimes get corrupted. The funny thing is that since JPEG is a sequential decoding scheme, the camera would successfully print the broken images. They’d just have a bunch of garbage starting somewhere near the bottom.

Looking around online, I found that I’m not the only person who had this problem. This camera is seriously screwy.

Taking a scope trace of the output, everything looks fine, so it’s not an electrical issue. I think it’s just something about the camera’s FPGA. I have two theories:

- Over long transmissions, a poorly calibrated serial clock could lose time and send out a bit slightly out of time.

- The processor on the camera can’t sustain that data rate for so long and eventually has to start managing background tasks which interfere with transmission.

Either way, grabbing the image and processing it in portions both reduces the length of each transmission and provides some “cool down” time between transmissions while the camera is processing.

Other improvements

Gamma

While having a printer camera was cool, I was sort of bummed about the quality of the images I was getting. Large dark sections of the image tended to bleed together. I think it’s a result of the printer body itself heating up and blackening the paper even when the local heater head isn’t active.

I needed a way to generally brighten the image to prevent this from happening. I tried a few things originally like simply taking every pixel value below 10 and rounding it up to 10 or changing the cut off point on the dithering algorithm from 128 to 80 forcing more pixels to be pushed to white.

While these did brighten the image some, they also reduced contrast and made the pictures generally worse.

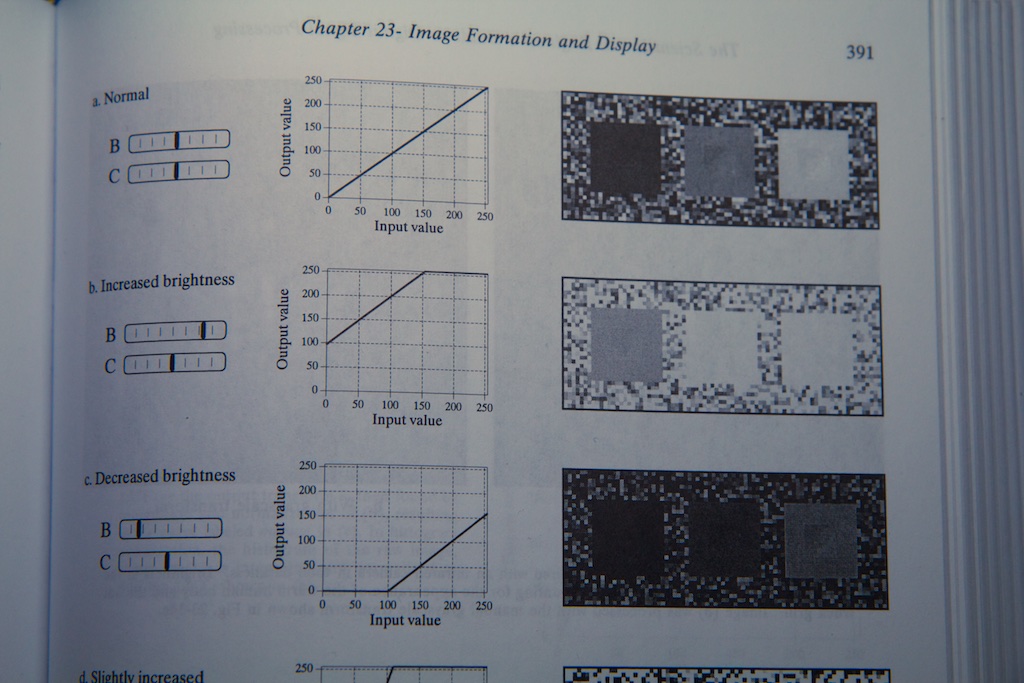

For help, I turned to Digital Signal Processing by Steven W. Smith. On page 391, he describes Gamma curves which are a method for adjusting the brightness and contrast of an image:

The concept is pretty simple. You can make a curve that maps every possible input value (0-255) with an output value. I sort of did this before when I mapped every input value below 10 to 10, but doing it as a curve gives you a much finer level of control.

I needed my gamma curve to allow the image to sometimes dip all the way to black (to maintain contrast), but I wanted to make it “harder” for large portions to be that dark. After playing around for a bit, I came up with this curve:

The curve is stored as a 256-element array (one entry for each input value from 0 to 255) where the “input” is the array index of the output value. With this addition, my grayscale function from before looks like this:

ditheredImage[i][j] = (gammaCurve[*(pSrcR++)])*210+(gammaCurve[*(pSrcG++)])*720+(gammaCurve[*(pSrcB++)])*70;
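
For illustration, a lookup table like `gammaCurve` could be filled as shown below. The curve here is a stand-in of my own choosing, out = 255 × (1 − (1 − in/255)²), not the curve from the post: it keeps 0 at 0 and 255 at 255 while lifting everything in between, and it needs only integer math. `build_gamma_curve` is a hypothetical helper.

```c
#include <stdint.h>

/* One entry per possible 8-bit input value. */
static uint8_t gammaCurve[256];

/* Fill the table with an illustrative brightening curve.
 * Black stays black and white stays white; midtones get lighter. */
void build_gamma_curve(void)
{
    for (int in = 0; in < 256; in++) {
        int dark = 255 - in;   /* distance from white */
        gammaCurve[in] = (uint8_t)(255 - (dark * dark) / 255);
    }
}
```

A table lookup costs one memory read per channel, so it adds almost nothing to the per-pixel work even without an FPU.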

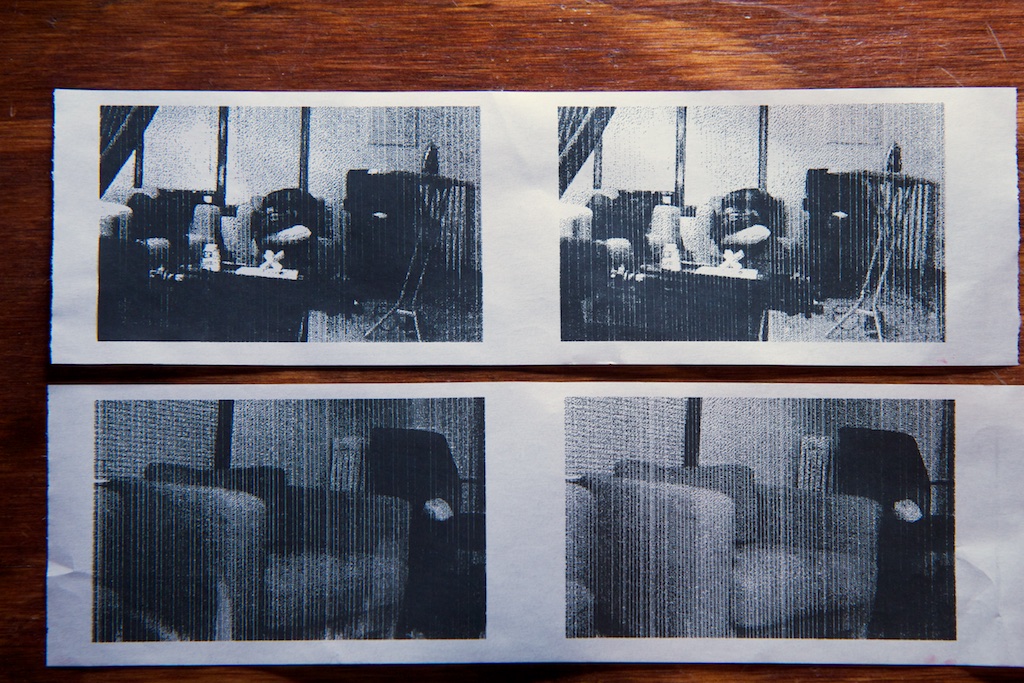

Just to make sure it was working, I wrote a version of the firmware that prints the image twice: once using the Gamma curve adjustment and once without. You can see the improvement:

Speed

Despite the “instant” nature of the camera, it was actually pretty slow. The printer itself takes about 18 seconds to spit out a full image. While it would be nice to make this faster (I’ll have to call Nordstrom), it’s pretty fun to watch, and I think seeing the image print out in real time is a fun part of the experience.

What isn’t fun is the 10 seconds it takes for the STM32 to grab the image off the camera module and decompress the JPEG. During this time, the camera just sits and blinks an LED. There are probably a lot of things I could do to optimize the JPEG decompression if I had any idea how JPEG actually works, but it’s all moot anyway because the camera can only transmit as fast as 115200 baud which means that it will take a minimum of 4.5 seconds just to grab the 50k or so bytes of the image. This is super slow and yet another reason why I think the camera module isn’t really meant for still pictures.
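
The transfer-time floor is easy to check with a little arithmetic. The sketch below assumes standard 8N1 framing (one start bit, eight data bits, one stop bit, so 10 bits on the wire per byte), which the text does not state; `uart_transfer_ms` is a made-up helper.

```c
#include <stdint.h>

/* Milliseconds needed to move `bytes` over a UART at `baud`,
 * assuming 8N1 framing (10 bits per byte), rounded to nearest. */
uint32_t uart_transfer_ms(uint32_t bytes, uint32_t baud)
{
    uint64_t bits_x1000 = (uint64_t)bytes * 10u * 1000u;
    return (uint32_t)((bits_x1000 + baud / 2u) / baud);
}
```

For a 50,000-byte image at 115200 baud this works out to about 4.3 seconds of pure transmission, in line with the rough figure above, and that is before any command overhead or gaps between chunks.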

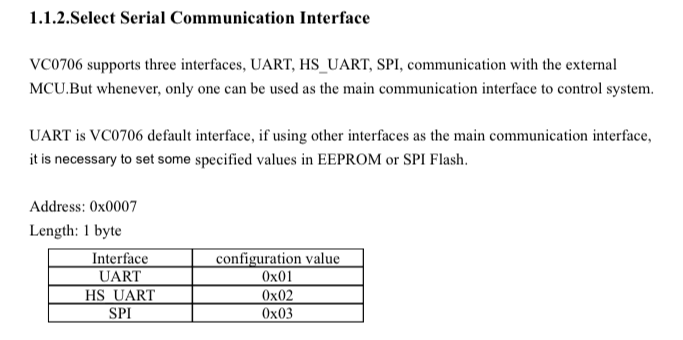

The data sheet mentions a “High Speed UART” interface that can apparently support up to 921.6kbps:

But the pins aren’t brought out to anything that I can solder to easily, and even if they were, the device needs to have its EEPROM programmed to select the HS UART for this to work:

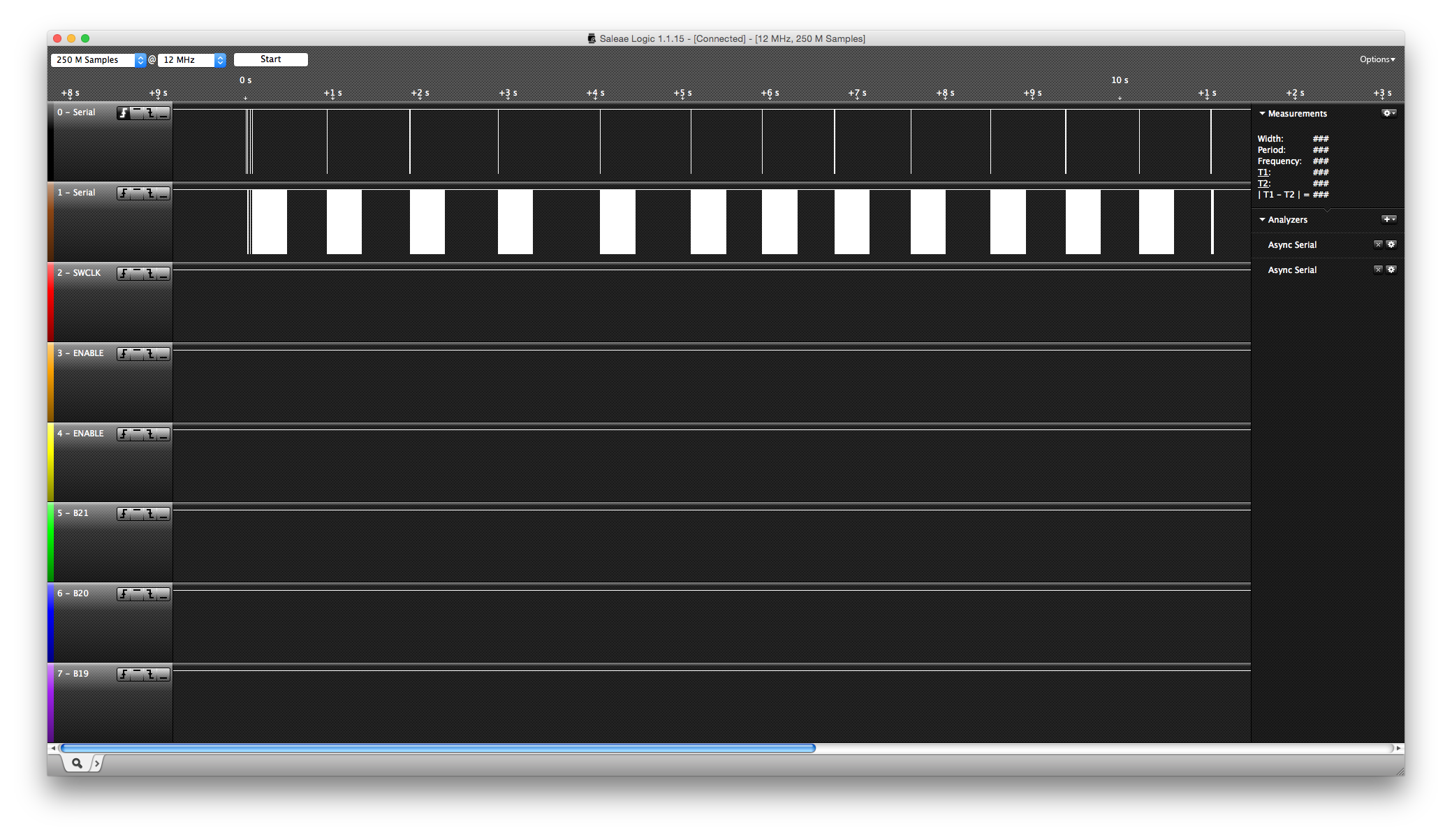

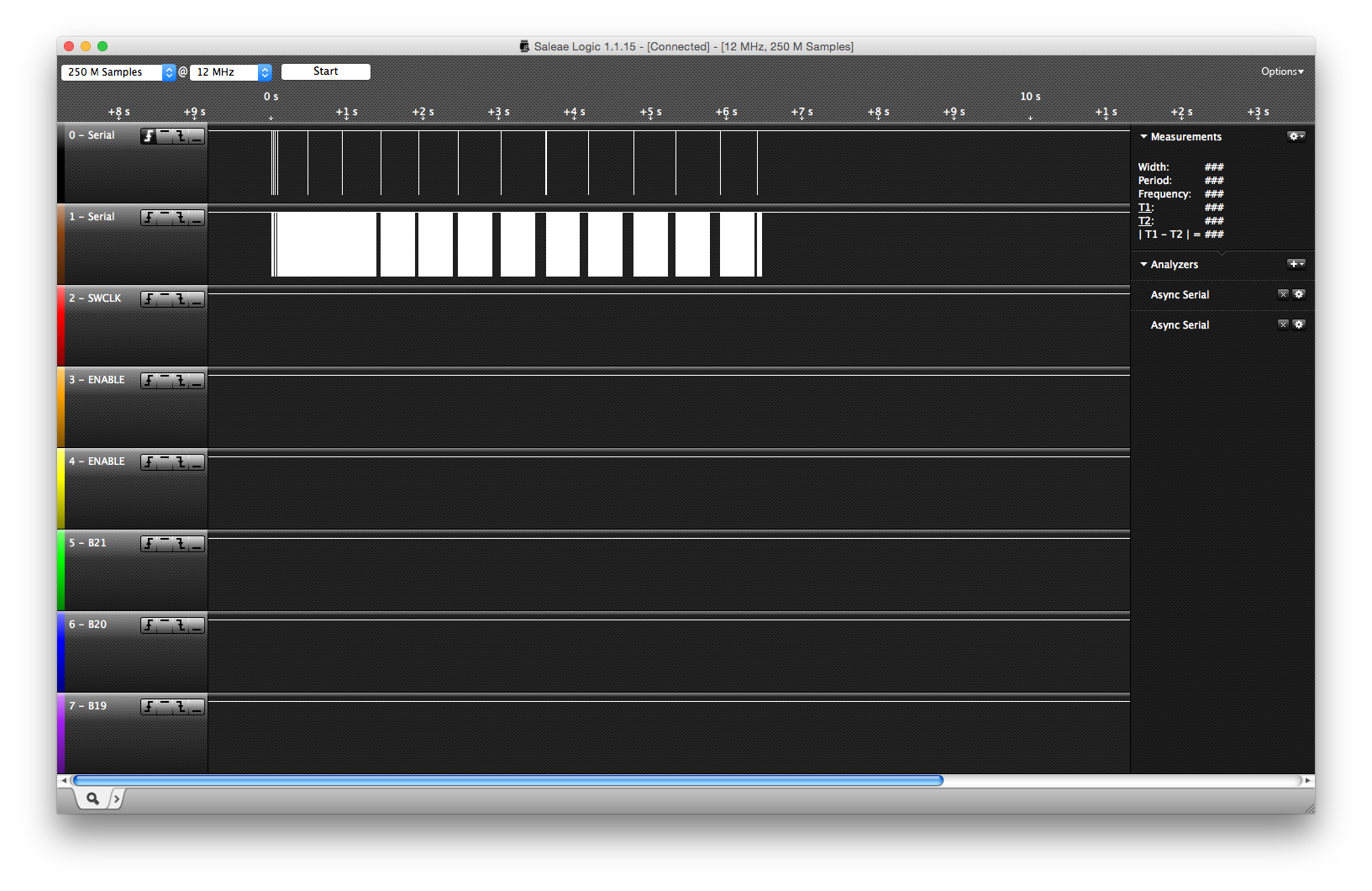

So if I can’t make the camera transmit any faster, can I transmit and decompress at the same time? A signal trace of the camera’s serial output looks like this:

The white blocks are periods where data is being transmitted and the gaps are when the processor is decoding.

There are two ways I can think to make this work. The first is to handle data reception via interrupt. Similar to the AVR, the STM32 can be set to trigger an interrupt when it receives a byte of data over serial UART. The processor can then pause whatever it’s doing, go grab the new data, and then resume.

While that’s pretty cool, it actually cuts into my processing time as it takes a little bit of time to switch tasks like that. A much better solution utilizes a feature you won’t find on an AVR: the DMA.

DMA or “Direct Memory Access” is a component built into some processors that moves data between a peripheral (like the UART) and memory without tying up the CPU. With a DMA in place, I could have the DMA controller fill one buffer with incoming image data while the processor is busy decoding another buffer from before.

This wasn’t super trivial as it involved managing two separate buffers and also dealing with all of the weirdness of the “address not divisible by 8” rule of the camera. Furthermore, I had to make the code smart enough to know when it’s time to switch buffers while still providing a continuous stream of data to the decoder.
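
The buffer-switching part can be sketched without any hardware. In the code below, `start_fill` is a stand-in for kicking off a DMA transfer from the camera: here it just copies from an in-memory image and finishes immediately, whereas on real hardware it would return right away and the code would have to wait for the transfer to complete before switching buffers. All names are hypothetical, and the alignment quirk is left out (4096-byte chunks are always 8-byte aligned).

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define CHUNK 4096u   /* chunk size mentioned in the text */

typedef struct {
    const uint8_t *image;   /* stand-in for the camera's stored JPEG */
    size_t image_size;
    size_t next_fetch;      /* offset of the next chunk to request */
    uint8_t buf[2][CHUNK];
    size_t filled[2];       /* valid bytes in each buffer */
    int active;             /* buffer the decoder is reading from */
    size_t pos;             /* read position inside the active buffer */
} pingpong_t;

/* Stand-in for "start a DMA transfer into buffer `which`". */
static void start_fill(pingpong_t *s, int which)
{
    size_t left = s->image_size - s->next_fetch;
    size_t n = (left < CHUNK) ? left : CHUNK;
    memcpy(s->buf[which], s->image + s->next_fetch, n);
    s->filled[which] = n;
    s->next_fetch += n;
}

void pingpong_init(pingpong_t *s, const uint8_t *image, size_t size)
{
    s->image = image;
    s->image_size = size;
    s->next_fetch = 0;
    s->active = 0;
    s->pos = 0;
    start_fill(s, 0);   /* the decoder has to wait for this one */
    start_fill(s, 1);   /* this one arrives while the first is decoded */
}

/* Hand the decoder its next byte; returns -1 at the end of the image. */
int pingpong_next(pingpong_t *s)
{
    if (s->pos == s->filled[s->active]) {
        int done = s->active;
        s->active ^= 1;        /* switch to the buffer filled meanwhile */
        s->pos = 0;
        start_fill(s, done);   /* refill the buffer just emptied */
        if (s->filled[s->active] == 0)
            return -1;
    }
    return s->buf[s->active][s->pos++];
}
```

The decoder only ever calls `pingpong_next`, so it sees one continuous stream and never needs to know which buffer it is reading from.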

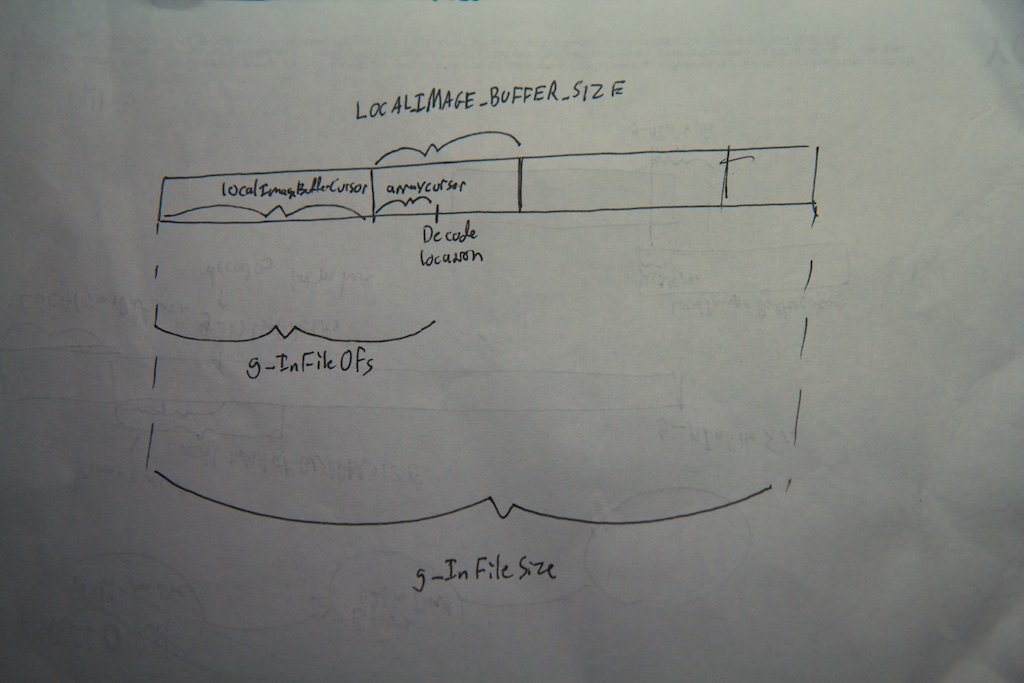

What I came up with involved a lot of trial and error as well as a pretty neat diagram drawn on the back of a packing slip from Newark:

With all of that in place, my image capture time dropped from around 11 seconds to just 6.5:

As you can see, the data transmission sections are much more tightly packed. While there are still some gaps that could be shrunk, there’s only about a second’s worth of speed improvement left before you start running into the limitations of the UART. Unless something jumps out at me looking for optimization, I’m going to stick with this code.

Thoughts about heading toward a KS project:

1. Separate the battery/charger from the camera/printer: Support attached and/or detached/cabled power, with standard LiPO standard and the option of a pack with replaceable/rechargeable NiMH cells (dirt cheap). Then, also offer the camera without a battery pack (DIY power is relatively easy if the interface is simple).

2. Personally, I’d want a clear case with all the internals visible, using standoffs and 3D-printed brackets where needed. Hopefully, this would also be the simplest/cheapest case.

3. Kit the custom parts and include a simple “no-solder” BOM for the rest. This permits a “partial gift” for the makers among us. And I certainly don’t mind giving Adafruit some business, especially if a small discount can be negotiated for this project’s BOM.

I think the above can provide a wider range of KS options and price levels with (hopefully) relatively little change to the core device.

Hey there, I think this might interest you: http://www.sciencedaily.com/releases/2014/10/141022143628.htm

Yep, I’m well aware of that problem. I wash my hands compulsively anyway, and I’m sure someone will come around with a BPA-free option soon in response to public outcry. The same thing happened with Nalgene and leaded gasoline.

I want this! I want this soooo bad. Please sell me the first one!

When this becomes available, make sure it can be sent to Australia!!!!

Hi Ch00f,

Your PA15 pin isn’t busted; the problem is that it is by default mapped as a JTAG debug pin and not to the GPIO block. In the device datasheet (the 100-page thing, NOT the 1000-page reference manual), there will be a “pin definitions” table near the front containing pin numbers for all the different package options, pin names (“PA15”), pin-types (“I/O”) and 5V-tolerance (“FT”), a “Main Function” column (this is the important part) and “Alternate Function” columns.

Note that for most GPIOs, the Main Function is GPIO, i.e. at power-on, PC12 is PC12, etc. However at power-on, PA15 is JTDI (jtag data in). This is necessary so that JTAG can start talking to a device when it boots. If you’re using SWD (and it seems you are) and want to make use of the PA15 pin, you need to disable the JTAG peripheral and its pin remapping.

Note that if you don’t want to be able to debug or maybe ever load code into the device again, you could also free up PA13 and PA14 which are the SWD pins.

Email me if you get stuck and I can send you the specific line of code you need; I just don’t have it here right now.

(I found this one out the hard way while playing with an stm32; I wasted a good 4 hours figuring it out)

THANK YOU

I figured it was something like that. I mentioned that part specifically hoping someone would figure it out for me.

Thanks for the tip!

You are a silly man with silly ideas. As soon as I started the video I thought, “Why is he recreating the GameBoy Camera/Printer?” Your presentation was mockingly beautiful. It’s good to see your personal projects continue to be so whimsical.

What’s nice about the Cortex series is that some of the “peripherals” are standardized by ARM, so no matter the manufacturer, they’ll all behave the same. SysTick, FPU, NVIC, etc.

To enable the FPU, google “cortex m4 enable fpu” and you’ll find the following asm to do so (basically setting a couple bits to turn on the coprocessors in the coprocessor access control register):

    ; CPACR is located at address 0xE000ED88
    LDR.W R0, =0xE000ED88
    ; Read CPACR
    LDR R1, [R0]
    ; Set bits 20-23 to enable CP10 and CP11 coprocessors
    ORR R1, R1, #(0xF << 20)
    ; Write back the modified value to the CPACR
    STR R1, [R0]

I'm actually working on a project right now using a thermal print head, but rather than an all-in-one module like you have, I am using discrete components: a solenoid to push a kyocera print head onto the paper and a stepper motor to drive the paper through. I can print a 1450×672 px image in 3-5 seconds. In my case, going faster actually improves the quality of the print because the print head stays warm.

I like this idea, the camera itself is sound for a version 1.0 project. To make it “sellable” you should consider a different housing altogether to make it look like an old school camera. Two ideas I thought are a TLR (like a Rollei) where the user looks down at a mirror to compose the image through a lens, while the camera is in a lens just below it, and the printer goes out the side. Another is a Brownie box camera style with an offset finder and the camera in a central front port. Either way something that looks like an old school film camera from a few feet away would be a winner.

This is fantastic! You basically made a spiritual successor to the King Jim Da Vinci DV55. I really want to see this becoming a real product and hope you go with Kickstarter on this project!

please keep me posted on all news!

please add me to your mailing list.

love it!!

i

I want this. If it’s become product please sell at JAPAN too.

I hope ship to Japan!

I’d like to buy it!

please send me all news about it.

l love it

I want it!

This looks fun!

I’ll buy it when it goes on sale 😀

Fantastic ! I love it !

Great! I want to buy one.

Great !!!!!! I want this.

Great!!

It must be goint to be a great tool.

I am really excited about it from now.

I want buy it!

it’s a fantastic invention Really hoping to the real product asap!!

Japan.Yokohama

great‼︎ please add me to your mailing list.

I love it !

Fantastic!

Please let me know your progress of the masterpiece.

soooo geate:)

i want this!!!

PrintSnap is very good.

I like it !

Please add me to your mailing list.

I want one!

I like this idea!

It’s fantastic!

Please add me to your mailing list

I remembered a pocket camera of Game Boy!

Wonderful.I wait for a followup!

The thing that is really cool. Commercialization sure if I want to carry one.

This is interesting!! ( ´ ▽ ` )ノ

I like it!

I WANT CAMERA!

Hi !

I like it !!!!

I want this!

Please sell me the first one!

Very cool! Sell me as soon as possible!

If it goes on sale, I’d consider buying it.

I’m interested.

Amazing….Please add me to your mailing list!!!!!

Looks like a lot of fun!

booked

This is really amazing! I really want to have one! please add me in your mailing list.

I can’t wait for this on sale… 😀

cool

Good

I Want to buy this camera

Pls let me know any infos,

Great!

I want it.

It is a great idea. This would be a great present for my 8 years old daughter who loves to take pictures. PLease make it at an acceptable price for normal people.

Thanks

This product concept is awesome!!

Plz add my name on your list!!

I’d love to buy!

Its fantastic! Let me know when is available!

this would be a hit on kickstarter, its totally geared towards the 20-30 something year old, new and upcoming product loving, crowd that kickstarter brings in. where are you based out of?

Hi ch00f!

We really like your “PrintSnap Instant Camera” project and want to cover it on EEWeb.com for our readers.

EEWeb.com is an online resource community site for Electrical Engineers and I’m sure that your project will be a great addition as an entry on our webpage.

Would it be ok, if we write an article about your project and include some pictures and other relevant materials?

We will give you credit for the project and link back to your site so our readers can learn more about you.

Hope to hear from you soon.

Regards,

Tin

Sure! Go for it. Send me a link when it’s up.

Hi! That’s good to hear. Thank you.

We will update you the moment the project is already live on our site.

I want this!

u got a great idea!

i want it!

I will buy this camera!!!

Please tell me as soon as you launch!

I want this.. Hope you ship it in the Philippines

That is great use of thermal paper roll.haha~

It could be really nice if you can use your code to create something like an app for smartphone who can automatically dither the photo you take and send it to a bluetooth thermal printer !

Omg..I love it … I hope it becomes available soon..

U rock!!!

me to 😉

how could i get one ? looks wonderful

I think is a great invention alright i never would have come up with that idea ist nice to see other beings like myself that are creative that think outside of the box that are not ordinarily “bookends fir i have made inventions too like a mammoth gunboat whitter folder pocket knife which you cant put in your pocker most of the components are made of wood the spring is made of pernabucco which us primarily made for the use of violin bows because of its incredible inert retention

Ability the only metal is the blade itself from a barbecue cutlery set that the handle

Rotted and I revamped the blade. Total length is approx a foot and a half Ebony Gabon is used for the stationary retainer and pivot pin I hope i did noi talk your ear off ps. Ive been meaning to contact case cutlery because they are ineed of engineers and a friend up the street also is creative in this field he is very adequate when it comes to tolerances he helped me with ideas in design just by his influence and knowledge.
